This discovery project, carried out in early 2024, explored the potential for a Large Language Model (LLM) AI assistant to democratise access to, and lower the barrier to using, the kind of richer and more complex data we publish on behalf of the Environment Agency. The project explored how best to give the LLMs (ChatGPT 3.5 and 4) access to the Hydrology service in a way that integrates seamlessly with conversational interactions with users. This report sets out its findings, covering both the positive results and the issues that arose.

Brief Summary:

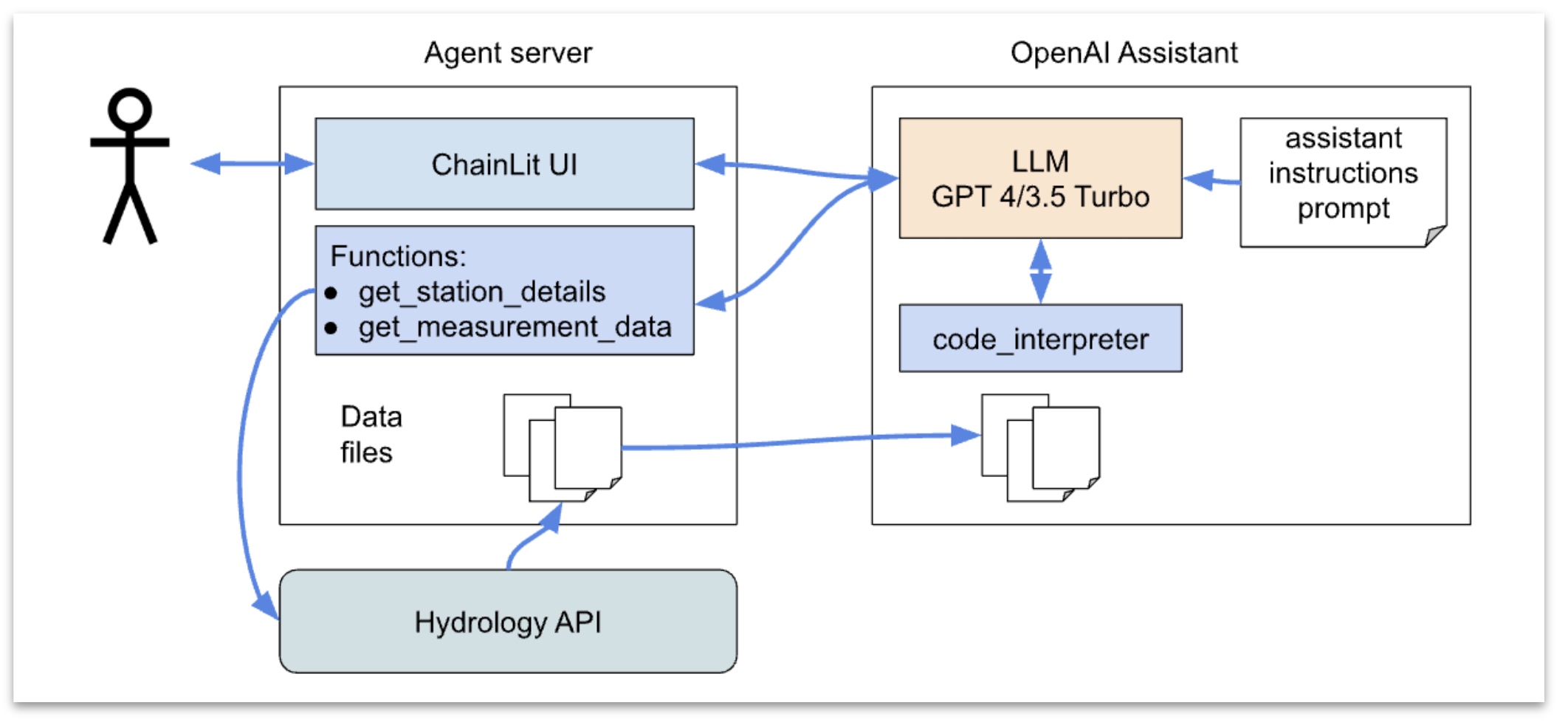

The project developed a simple architecture for integrating the EA Hydrology service API (Application Programming Interface), which provides programmatic access to the service’s data, with the ChatGPT 3.5 and 4 LLMs. The integration used some simple API access scripts, instructional prompts (the assistant instruction prompt) and a customised conversational interface.
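
One way to picture the “API access scripts” layer is as a small tool that the LLM can ask the application to run. The Python sketch below is an illustration only, not the project’s code: it assumes OpenAI-style function calling (a JSON-schema tool description), and the endpoint path (`/id/stations.json`), query parameters (`observedProperty`, `lat`, `long`, `dist`, `_limit`) and response fields (`items`, `label`, `@id`) are assumptions to be checked against the Hydrology API reference. The names `find_stations`, `build_stations_url` and `dispatch_tool_call` are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed base URL and paths; check them against the Hydrology API reference.
HYDROLOGY_BASE = "https://environment.data.gov.uk/hydrology"


def build_stations_url(observed_property=None, lat=None, long=None, dist_km=None, limit=50):
    """Build a station-search URL (query parameter names are assumptions)."""
    params = {"_limit": limit}
    if observed_property:
        params["observedProperty"] = observed_property
    if None not in (lat, long, dist_km):
        params.update({"lat": lat, "long": long, "dist": dist_km})
    return f"{HYDROLOGY_BASE}/id/stations.json?{urlencode(params)}"


def fetch_json(url):
    """Fetch and decode a JSON response from the API."""
    with urlopen(url, timeout=30) as resp:
        return json.load(resp)


# Hypothetical tool description in the JSON-schema style used by OpenAI
# function calling, so the model can decide when to search for stations.
FIND_STATIONS_TOOL = {
    "type": "function",
    "function": {
        "name": "find_stations",
        "description": "Find Environment Agency hydrology monitoring stations, optionally near a point.",
        "parameters": {
            "type": "object",
            "properties": {
                "observed_property": {"type": "string", "description": "e.g. waterFlow or waterLevel"},
                "lat": {"type": "number"},
                "long": {"type": "number"},
                "dist_km": {"type": "number", "description": "search radius in km"},
            },
        },
    },
}


def dispatch_tool_call(name, arguments_json, fetch=fetch_json):
    """Run a tool call requested by the model and return a JSON string for its reply."""
    if name != "find_stations":
        return json.dumps({"error": f"unknown tool: {name}"})
    # Ignore any arguments the model invents that the tool does not accept.
    allowed = {"observed_property", "lat", "long", "dist_km"}
    args = {k: v for k, v in json.loads(arguments_json or "{}").items() if k in allowed}
    url = build_stations_url(**args)
    items = fetch(url).get("items", [])
    # Return only labels and identifiers, plus the source URL so answers can cite it.
    stations = [{"label": s.get("label"), "id": s.get("@id")} for s in items]
    return json.dumps({"source": url, "stations": stations})
```

In a chat loop, `FIND_STATIONS_TOOL` would be passed to the model alongside the assistant instruction prompt; when the model requests `find_stations`, the application runs `dispatch_tool_call` and sends the JSON back. Returning the source URL gives the model something concrete to cite, and trimming the response keeps the amount of text sent to the model (and so the cost) down. The `fetch` parameter also makes it easy to test without calling the live service.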

The system was designed to answer questions about the Hydrology Service and its data, as illustrated below.

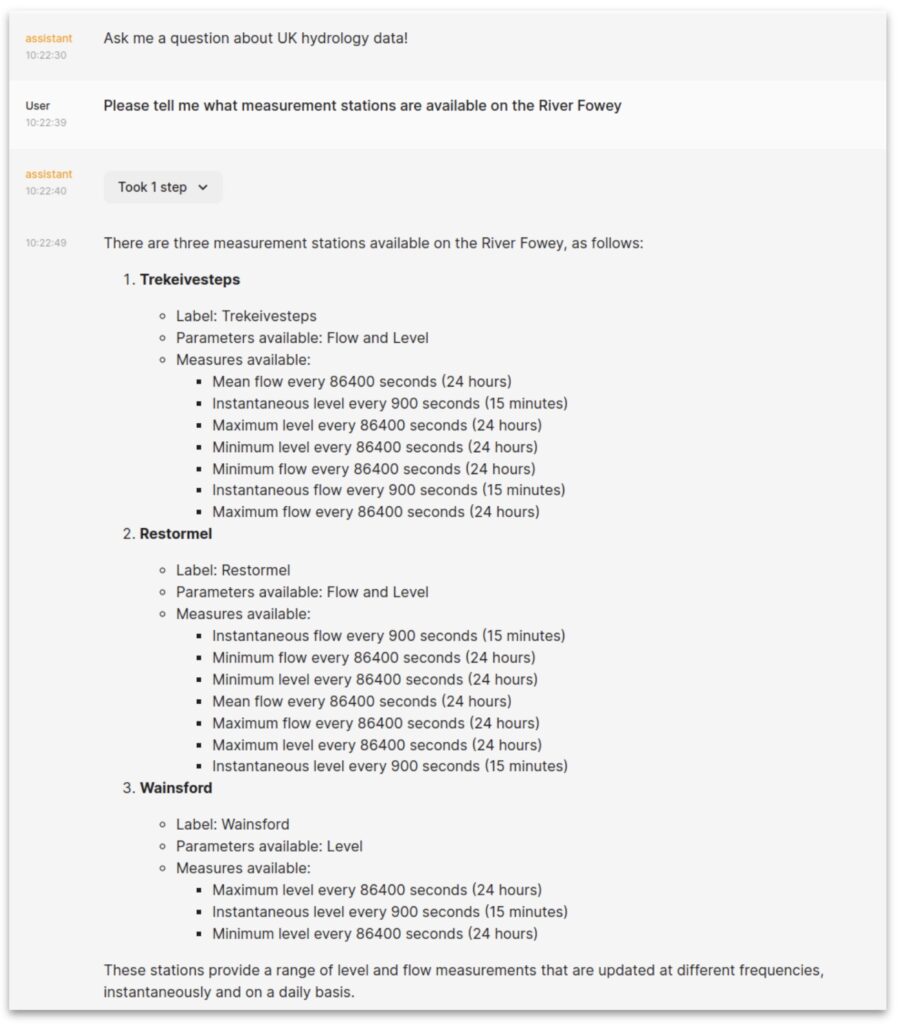

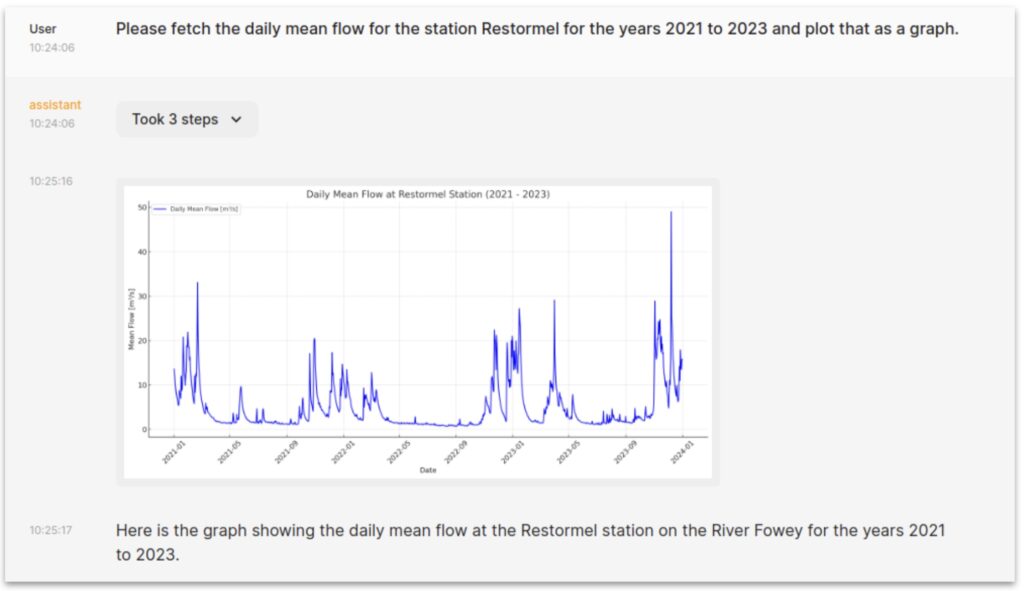

Broadly, the findings were that the system illustrated the powerful potential for providing access to data and data analysis for people who are not coding experts or are not familiar with the Hydrology API. It was very flexible and adaptive, and was sometimes able to spot data issues, e.g. alignment issues or missing values between two datasets. In those cases it was seen to automatically introduce sensible interpolation and realignment steps so that the correlation calculation could go ahead. It also provided access to the details of how it processed the data.
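
The kind of realignment and interpolation the assistant introduced can be sketched in Python. This is a minimal illustration under stated assumptions, not a record of what the model did (its chosen steps varied between runs): it assumes two daily series held as `{date: value}` dictionaries, restricts both to their overlapping date range, linearly interpolates missing days, then computes a Pearson correlation. The function names are hypothetical.

```python
from datetime import date, timedelta
from math import sqrt


def interpolate_daily(series, start, end):
    """Linearly interpolate a {date: value} series onto every day in [start, end].

    Days without a known value on both sides are returned as None.
    """
    known = sorted(series.items())
    out = {}
    day = start
    while day <= end:
        if day in series:
            out[day] = series[day]
        else:
            before = [(d, v) for d, v in known if d < day]
            after = [(d, v) for d, v in known if d > day]
            if before and after:
                (d0, v0), (d1, v1) = before[-1], after[0]
                frac = (day - d0).days / (d1 - d0).days
                out[day] = v0 + frac * (v1 - v0)
            else:
                out[day] = None
        day += timedelta(days=1)
    return out


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two paired values")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a series is constant over the overlap")
    return cov / (sx * sy)


def aligned_correlation(a, b):
    """Realign two daily series on their common period, fill gaps, then correlate.

    Returns (r, number of paired days, number of interpolated values).
    """
    start = max(min(a), min(b))
    end = min(max(a), max(b))
    if start > end:
        raise ValueError("the series do not overlap in time")
    a_full = interpolate_daily(a, start, end)
    b_full = interpolate_daily(b, start, end)
    days = [d for d in a_full if a_full[d] is not None and b_full[d] is not None]
    filled = sum(d not in a for d in days) + sum(d not in b for d in days)
    r = pearson([a_full[d] for d in days], [b_full[d] for d in days])
    return r, len(days), filled


# Usage with made-up values: the downstream series is missing 2 January.
upstream = {date(2024, 1, d): v for d, v in [(1, 1.0), (2, 2.0), (3, 3.0), (4, 4.0)]}
downstream = {date(2024, 1, d): v for d, v in [(1, 2.0), (3, 6.0), (4, 8.0)]}
r, n, filled = aligned_correlation(upstream, downstream)  # n == 4, filled == 1
```

Reporting how many values were interpolated alongside `r` mirrors the point above that the assistant could expose how it had processed the data.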

There were also issues. For example, there was a lot of randomness in the results: what was presented from one run to another for the same type of question could vary considerably, sometimes including full details with hyperlinks, sometimes providing a succinct summary, and with widely varying formatting. More seriously, it sometimes had “bad days” and seemed unable to find analysis options it had previously used. It is also possible for the LLM to ‘hallucinate’ (make up data or information), and the architecture needed special care, e.g. in building the assistant instruction prompt, to minimise that risk. Cost was also a consideration, as it could be significant at the time.
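
As an illustration of the kind of care involved, the Python sketch below pairs a hypothetical assistant instruction prompt (the wording is invented, not the project’s prompt) with a simple post-check that flags any URL in an answer that no tool call actually fetched. The check is one possible safeguard against hallucinated hyperlinks, offered as an assumption rather than something the project reports using; the message format follows the common `role`/`content` chat convention.

```python
import re

# Hypothetical assistant instruction prompt; the wording is illustrative only.
ASSISTANT_INSTRUCTIONS = """\
You help users explore Environment Agency hydrology data.
- Only report station names, identifiers and readings returned by a tool call in this conversation.
- If a tool returns no data, say so; never estimate or invent values.
- Cite the API URL that each figure came from.
- When you interpolate or realign data, state which steps you applied.
"""

# Matches http(s) URLs, stopping at whitespace, brackets, quotes or '>'.
URL_PATTERN = re.compile(r"https?://[^\s)\]>\"']+")


def build_messages(user_question, history=()):
    """Assemble a chat-style message list with the instructions first."""
    return [
        {"role": "system", "content": ASSISTANT_INSTRUCTIONS},
        *history,
        {"role": "user", "content": user_question},
    ]


def unverified_links(answer, fetched_urls):
    """Return URLs quoted in the answer that no tool call actually fetched."""
    fetched = set(fetched_urls)
    result = []
    for url in URL_PATTERN.findall(answer):
        url = url.rstrip(".,;")  # drop sentence punctuation caught by the pattern
        if url not in fetched:
            result.append(url)
    return result
```

A non-empty result from `unverified_links` could be shown to the user as a warning, or used to ask the model to revise its answer.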

We also noted that conversational user interfaces will not suit all tasks when compared with graphical and map-based user interfaces. For example, it’s easier to ask what data is available for some area of the country by pointing to it on a map than by typing out a description of where you mean, and the same applies to interactive visualisation. Nevertheless, the chat interface does offer huge flexibility. The ability to ask the agent to fetch, analyse and present data via one short text prompt can be a lot easier than manually downloading data, configuring a general-purpose interactive tool and learning to drive it. That’s potentially great from an accessibility point of view.

The project also highlighted future possibilities, e.g. combining an AI assistant approach with better support for map-based interactions, both to express what the user is interested in and to visualise some of the results, and exploring how data could be better fine-tuned to support LLM-based data analysis.