Many of our projects involve transforming source data from a tabular format into RDF aligned with classes and properties drawn from RDF vocabularies. Over time, the source data, the target vocabularies and the transforms themselves can all change. We now run a growing number of relatively generic regression checks over the generated data, and these checks have saved us from deploying broken data on several occasions. We will introduce you to a few of them in this #TechTalk article.

We’ll return in a separate article to discuss data validation through the generation of domain-specific queries that directly test whether the data violates the constraints expressed in the model.

Simple Regression Framework

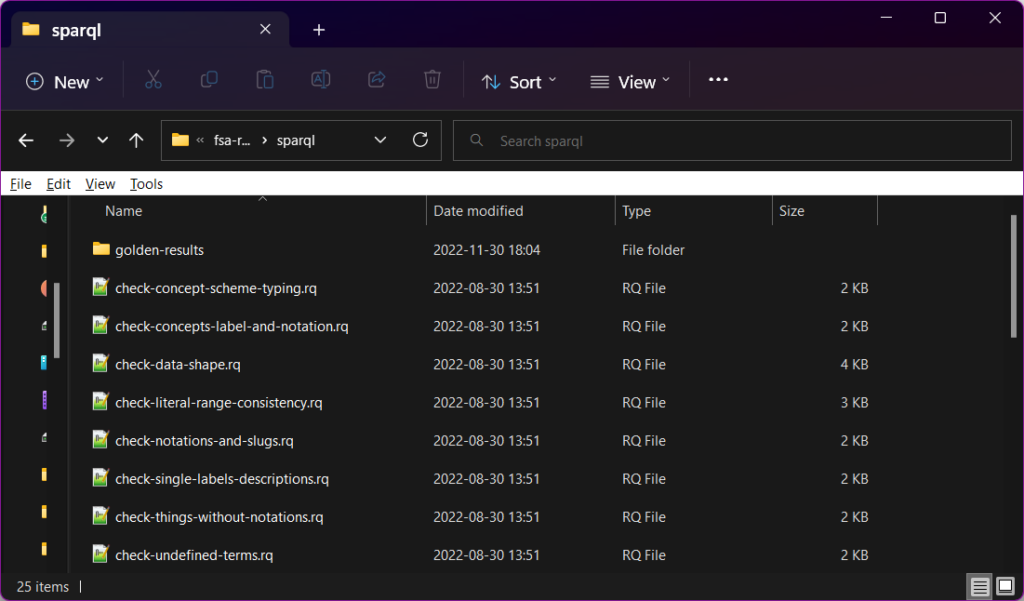

We use a shell script like the one below to run a series of SPARQL-based regression tests over transformed data. We use the Apache Jena RDF command-line tools, in particular tdbquery, to execute SPARQL queries over the data. Over the life of a data project we tend to accumulate generally useful SPARQL queries in a ./sparql subdirectory, and we prefix those intended for regression testing with the string check-. The heart of the script iterates over the matching queries and applies each one to the data. If no golden-results file exists for a query (its name is derived from that of the query), the script creates one from the current results; if the file does exist, the script checks that the new results match those previously recorded. Such is the nature of regression testing.

```bash
#!/bin/bash
# Run all the check-* queries in the ./sparql sub-directory.
# Create golden-results files as required, or check against previous results.

# Set script to exit on error while building data
set -e

echo "Rebuilding Data to Test"
bin/make-rdf.sh
bin/make-tdb.sh

# Return code accumulator
res=0

# Make sure the golden-results directory exists before writing into it
mkdir -p sparql/golden-results

# Allow to proceed on errors to maximise error reporting
set +e

for f in sparql/check-*.rq; do
    fn=$(basename "$f")
    bn=${fn%%.*}
    results=sparql/golden-results/${bn}.txt
    echo "$bn"
    if [ ! -f "$results" ]; then
        echo "Making $results"
        tdbquery --loc=DB --set tdb:unionDefaultGraph=true --results=text --query="$f" > "$results"
    else
        echo "Checking $results"
        tdbquery --loc=DB --set tdb:unionDefaultGraph=true --results=text --query="$f" | diff -b - "$results"
        status=$?
        [ $status -eq 0 ] && echo "Results Match" || echo "Results Differ"
        # Keep the first failure; later passes do not reset it
        [ $res -eq 0 ] && res=$status
    fi
done

[ $res -eq 0 ] && echo "All tests passed." || echo "At least one test failed."
exit $res
```

This script continues in the face of individual failures, but exits with a successful return code only if all tests have passed. We use GitHub Actions to run the test suite systematically as part of pull requests and deployment commits.
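The make-or-check logic at the heart of the script can be tried out without Jena. The following is a minimal sketch, not part of our tooling: `check_golden` is a hypothetical helper, and query results are read from standard input in place of `tdbquery` output. As in the script, the first run records a golden-results file, later runs compare against it with `diff -b`, and a return-code accumulator remembers any failure.

```bash
#!/usr/bin/env bash
# Minimal sketch of the golden-results pattern; results arrive on stdin
# rather than from tdbquery. check_golden is a hypothetical helper name.

# check_golden GOLDEN_FILE < results
#   Creates GOLDEN_FILE from stdin if it does not exist (returns 0);
#   otherwise diffs stdin against it, ignoring changes in the amount of
#   white space (diff -b), and returns 0 on a match, 1 otherwise.
check_golden() {
    local golden=$1
    if [ ! -f "$golden" ]; then
        echo "Making $golden"
        cat > "$golden"
        return 0
    fi
    echo "Checking $golden"
    if diff -b - "$golden"; then
        echo "Results Match"
        return 0
    fi
    echo "Results Differ"
    return 1
}

# Usage: three runs of the same hypothetical check against one golden file.
dir=$(mktemp -d)
golden="$dir/check-example.txt"
res=0
printf 'focus\n-----\n' | check_golden "$golden" || res=1   # Making ...
printf 'focus\n-----\n' | check_golden "$golden" || res=1   # Results Match
printf 'focus\n<x>\n'   | check_golden "$golden" || res=1   # Results Differ
echo "Overall status: $res"                                 # Overall status: 1
rm -rf "$dir"
```

Keeping the accumulator separate from each check's own status is what lets every failing check be reported before the run exits non-zero.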

So to the tests…

Each of the test queries is named to give some indication of the intention of the test.

The subsections that follow present the most interesting and generally reusable test queries.

Undefined Things

A common mistake is to reference ‘things’ about which there is no data.

This test query reports on ‘things’ that are referenced (either as the predicate or object of an RDF statement), but which are undescribed (i.e. they are not the subject of any RDF statements).

```sparql
# Add any useful prefixes here

select ?focus
       (sample(?s) as ?ex_subject)
       (sample(?p) as ?ex_prop)
       (sample(?o) as ?ex_object)
where {
  { ?s ?p ?o
    BIND(?o as ?focus)
  } UNION {
    ?s ?p ?o
    BIND(?p as ?focus)
  }
  FILTER( !isLiteral(?focus) )
  FILTER NOT EXISTS { ?focus ?f_p ?f_o }
} GROUP BY ?focus
order by str(?focus)
```

The normal expectation for this query is that it returns an empty result set. In the case of an ‘error’, the query result indicates the undescribed ‘thing’ (?focus) and provides an example of a statement in which it is used (?ex_subject, ?ex_prop, ?ex_object) to aid in further diagnosis.

Within a given project it may be necessary to add specific filters so as to exclude some focus items from being checked. Note that the primary work of this query is done by the two filter statements at the end that exclude literal focus items and select only focus items that are not the subject of any statement.
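To make the logic concrete, here is a toy re-statement of the same check in awk. It is only a sketch: `undefined_things` is a hypothetical helper, and it assumes a deliberately simplified N-Triples layout (one triple per line, terms separated by single spaces, no spaces inside literals), so it is not a real N-Triples parser. The example also shows why project-specific filters may be needed: predicates are reported unless the vocabulary that describes them is loaded as well.

```bash
#!/usr/bin/env bash
# Toy version of the "undefined things" check.
# ASSUMPTION: simplified N-Triples, one triple per line, terms separated by
# single spaces, no spaces inside literals (not a real N-Triples parser).

# undefined_things FILE
#   Prints each non-literal term used as a predicate or object that never
#   appears as a subject, i.e. the ?focus values the query would report.
undefined_things() {
    awk '
        NF >= 3 {
            subject[$1] = 1
            used[$2] = 1                    # predicate position
            if ($3 !~ /^"/) used[$3] = 1    # object position, skipping literals
        }
        END {
            for (t in used)
                if (!(t in subject)) print t
        }
    ' "$1" | LC_ALL=C sort
}

data=$(mktemp)
cat > "$data" <<'EOF'
<http://example.org/a> <http://example.org/knows> <http://example.org/b> .
<http://example.org/a> <http://example.org/name> "Alice" .
<http://example.org/b> <http://example.org/name> "Bob" .
<http://example.org/b> <http://example.org/knows> <http://example.org/c> .
EOF
undefined_things "$data"
# prints:
# <http://example.org/c>
# <http://example.org/knows>
# <http://example.org/name>
rm -f "$data"
```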

Single valued labels and descriptions

One of the most common errors that we encounter, particularly when source data is presented in a de-normalised form, is some level of inconsistency in labels and descriptions of things. When this occurs an item may come to be blessed with multiple labels or descriptions in a given natural language (English, Welsh,…).

This query checks a number of annotation properties for multiple distinct values with the same lang tag. This should be an exceptional circumstance.

```sparql
prefix dct: <http://purl.org/dc/terms/>
prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#>
prefix skos: <http://www.w3.org/2004/02/skos/core#>
# Add more useful prefixes here

SELECT ?s ?p ?l1 ?l2
WHERE {
  values ?p { skos:prefLabel dct:description skos:definition rdfs:label }
  ?s ?p ?l1, ?l2 .
  FILTER (
    isLiteral(?l1) && isLiteral(?l2) &&
    (lang(?l1) = lang(?l2)) &&
    (str(?l1) < str(?l2))
  )
}
order by ?s ?p ?l1 ?l2
```

Note the use of a values statement to nominate the RDF properties being tested. The query binds pairs of values, ?l1 and ?l2, for each subject and property under consideration. The filters ensure that both values are literals, that they carry the same language tag and that they are distinct; the str(?l1) < str(?l2) comparison also means each pair is reported only once. Any row returned therefore signals an ‘error’: more than one value with the same language tag.

In this case, rather than sampling, the query result contains all instances that fail the test. In our experience there are usually very few of them, and each one usually points to an error in the source data, typically differences in letter case, punctuation or white space.
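The same grouping logic can be sketched outside SPARQL. The toy awk version below assumes a hypothetical tab-separated input of subject, property, lexical value and language tag (empty when the literal has none); `duplicate_labels` is an illustrative name. Like the query, it keys on subject, property and language tag, ignores repeated identical values, and reports each pair of distinct values once, ordered by string comparison.

```bash
#!/usr/bin/env bash
# Toy version of the single-valued labels check.
# ASSUMPTION: tab-separated input lines of
#   subject<TAB>property<TAB>lexical value<TAB>language tag (may be empty)

# duplicate_labels FILE
#   Prints subject, property, value1, value2 (tab-separated) for every pair of
#   distinct values sharing a subject, property and language tag, with
#   value1 < value2 so each pair appears once (cf. str(?l1) < str(?l2)).
duplicate_labels() {
    LC_ALL=C awk -F '\t' '
        {
            key = $1 "\t" $2 "\t" $4               # subject, property, lang tag
            if (!((key SUBSEP $3) in seen)) {      # skip repeated identical values
                seen[key SUBSEP $3] = 1
                n[key]++
                val[key, n[key]] = $3 ""           # force string comparison
            }
        }
        END {
            for (key in n)
                for (i = 1; i <= n[key]; i++)
                    for (j = 1; j <= n[key]; j++)
                        if (val[key, i] < val[key, j]) {
                            split(key, k, "\t")
                            print k[1] "\t" k[2] "\t" val[key, i] "\t" val[key, j]
                        }
        }
    ' "$1" | LC_ALL=C sort
}

data=$(mktemp)
printf '%s\t%s\t%s\t%s\n' \
    'ex:e1' 'rdfs:label' 'Food Hygiene' 'en' \
    'ex:e1' 'rdfs:label' 'Food hygiene' 'en' \
    'ex:e2' 'rdfs:label' 'Labelling'    'en' > "$data"
duplicate_labels "$data"   # one row: ex:e1, rdfs:label, Food Hygiene, Food hygiene
rm -f "$data"
```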

Notation code and URI consistency

Some of the applications that we develop rely on there being consistency between a short code for the item, typically given as a skos:notation, and the last part of the item’s subject URI.

This test query reports on instances where the given skos:notation does NOT correspond with the final (rightmost) URI component.

```sparql
prefix skos: <http://www.w3.org/2004/02/skos/core#>
# Add more useful prefixes here

select distinct ?s ?n where {
  ?s skos:notation ?n .
  BIND(replace(str(?s), "^.*[#/]([^/#]+)$", "$1") as ?slug)
  FILTER (str(?n) != ?slug)
}
# Order by notation as well, so ties on ?s do not vary between runs
ORDER BY str(?s) str(?n)
LIMIT 10
```

Again, the expectation is an empty result set. Here we report only 10 results. Ordering the results ensures that reported ‘errors’ are consistent between successive runs of the test. The ORDER BY and LIMIT clauses can be adjusted to suit the diagnostic needs of the situation when failures have been reported.
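The slug extraction is easy to check in isolation. For inputs like these, the query’s replace() pattern can be mirrored with a sed -E substitution, using \1 where SPARQL uses $1; `slug` and `notation_mismatches` are hypothetical helpers, and the tab-separated URI/notation input is an assumed format. Note that a URI ending in / or # does not match the pattern, so replace() returns it unchanged and the check reports it as a mismatch.

```bash
#!/usr/bin/env bash
# Sketch of the slug extraction used by the notation check, i.e.
#   replace(str(?s), "^.*[#/]([^/#]+)$", "$1")
# expressed as a sed -E substitution (\1 in place of $1).

# slug URI -> text after the last / or #; unchanged if the URI ends in / or #
slug() {
    printf '%s\n' "$1" | sed -E 's|^.*[#/]([^/#]+)$|\1|'
}

# notation_mismatches FILE
#   ASSUMPTION: FILE holds "subject-URI<TAB>notation" lines (hypothetical).
#   Prints the lines whose notation differs from the slug of the URI.
notation_mismatches() {
    tab=$(printf '\t')
    while IFS=$tab read -r uri notation; do
        [ "$(slug "$uri")" = "$notation" ] || printf '%s\t%s\n' "$uri" "$notation"
    done < "$1"
}

slug 'http://example.org/id/rating/5'       # prints: 5
slug 'http://example.org/def/ns#Business'   # prints: Business
slug 'http://example.org/id/rating/'        # prints the URI unchanged

pairs=$(mktemp)
printf '%s\t%s\n' \
    'http://example.org/id/rating/5' '5' \
    'http://example.org/id/rating/4' '04' > "$pairs"
notation_mismatches "$pairs"                # reports only the rating/4 line
rm -f "$pairs"
```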

Data shape introspection

One useful way to characterise an RDF dataset is by its use of properties. The query below introspects the use of properties by instances of the different classes in the associated model (which needs to be installed in the dataset being examined).

The output from this query, shaped by the select line, gives an account of each RDF/OWL property used by instances of a given RDF/OWL class, the type of the property’s values (the rdf:type of an object value, or the datatype of a literal value) and the multiplicity of usage (0, 1 or >1). Each row in the result set should be read as indicating that there exist class instances in the data that ‘use’ the given property with values of the given range type and multiplicity. A multiplicity of ‘>1’ does not indicate that all of the values are of the same type or class, only that at least one value is of the given class. A further test on property range consistency is described in the next section.

```sparql
prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>
prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#>
# Add more useful prefixes here

select distinct ?class ?p ?multivalue ?range
where {
  {
    { # Select all the {?class, ?cls, ?p} combinations.
      select ?class ?cls ?p
      where {
        { ?cls rdfs:subClassOf* ?class .
          ?thing a ?cls ;
                 ?p ?v .
          # Avoid anonymous superclasses (inc. restrictions)
          FILTER(!isBlank(?class))
          # Limit the classes of interest
          FILTER ( strstarts(str(?class), 'http://data.food.gov.uk/') )
        }
      } group by ?class ?cls ?p
    }
    # Check property usage of ?class (enumerating over subclass instances)
    ?thing a ?cls ;
           ?p ?v1 .
    # Probe for object value range...
    OPTIONAL {
      ?v1 a ?rangeType
    }
    # or datatype value range...
    bind(if(bound(?rangeType),?rangeType,datatype(?v1)) as ?range)
    # and check for more than one value.
    bind(if(exists {?thing ?p ?v2 filter (?v2!=?v1) },'>1','1')
         as ?multivalue )
  } UNION {
    { # Select all the {?class, ?p} combinations
      select ?class ?p
      where {
        { ?cls rdfs:subClassOf* ?class .
          ?thing a ?cls ;
                 ?p ?v .
          # Avoid anonymous superclasses (inc. restrictions)
          FILTER(!isBlank(?class))
          # Limit the classes of interest
          FILTER ( strstarts(str(?class), 'http://data.food.gov.uk/') )
        }
      } group by ?class ?p
    }
    # Look for a subclass instance that has no value for ?p
    filter exists {
      ?cls rdfs:subClassOf* ?class .
      ?thing a ?cls .
      filter not exists {?thing ?p ?v}
    }
    bind('0' as ?multivalue)
    bind('-' as ?range)
  }
} group by ?class ?p ?multivalue ?range
order by ?class ?p ?multivalue ?range
```

For datasets of any scale this query can take a long time to run.

The query is structured as a single UNION with two branches, each contributing independent rows to the result set. Each branch is headed by an inner select that enumerates all of the relevant class, subclass (left-hand/upper branch only) and property combinations.

The left-hand (upper) branch of the UNION visits all instances of each class and its subclasses to evaluate the type (?range) of property values, and whether there are subject instances (?thing) that have exactly one, or more than one, value for the given property (?p).

The right-hand (lower) branch of the UNION is fed by its leading inner select with the class and property combinations for which at least one instance has a value. The filter within the block then tests for the existence of other instances (?thing) of the class or its subclasses that have no value for the given property (?p).

The trailing group by clause (like the select distinct) ensures that each distinct combination of ?class, ?p, ?multivalue and ?range is output only once, and the order by clause keeps the rows in a stable order. The result is a tabular presentation that can be read much like a class diagram, and from which a class diagram could easily be sketched.
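The multiplicity column is the least obvious part of the query, so here is a much-reduced sketch of the same idea in awk. It is illustrative only: the tab-separated input format and the `shape_summary` name are hypothetical, each thing is assumed to have a single class, and there is no subclass reasoning and no ?range column. As in the query, a (class, property) pair is considered only if at least one instance of the class uses the property, and each row records whether some instance has 0, 1 or more than 1 value.

```bash
#!/usr/bin/env bash
# Much-reduced sketch of the ?multivalue part of the shape query.
# ASSUMPTION (hypothetical format): tab-separated lines of either
#   type<TAB>thing<TAB>class
#   prop<TAB>thing<TAB>property<TAB>value
# Each thing has one class; no subclass reasoning; no ?range column.

# shape_summary FILE -> distinct "class<TAB>property<TAB>multiplicity" rows
shape_summary() {
    awk -F '\t' '
        $1 == "type" { cls[$2] = $3 }
        $1 == "prop" { n[$2 SUBSEP $3]++; props[$3] = 1 }
        END {
            # Keep (class, property) pairs used by at least one instance,
            # as the inner selects of the query do.
            for (k in n) {
                split(k, a, SUBSEP)
                if (a[1] in cls) used[cls[a[1]] SUBSEP a[2]] = 1
            }
            for (t in cls)
                for (p in props) {
                    if (!((cls[t] SUBSEP p) in used)) continue
                    c = ((t SUBSEP p) in n) ? n[t SUBSEP p] : 0
                    m = (c == 0) ? "0" : ((c == 1) ? "1" : ">1")
                    print cls[t] "\t" p "\t" m
                }
        }
    ' "$1" | LC_ALL=C sort -u
}

data=$(mktemp)
{
    printf '%s\t%s\t%s\n' type e1 Establishment type e2 Establishment
    printf '%s\t%s\t%s\t%s\n' \
        prop e1 name A  prop e2 name B  prop e1 rating 5 \
        prop e1 phone 1 prop e1 phone 2
} > "$data"
shape_summary "$data"
# prints (tab-separated):
# Establishment  name    1
# Establishment  phone   0
# Establishment  phone   >1
# Establishment  rating  0
# Establishment  rating  1
rm -f "$data"
```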

As a regression test, the shape of the data as expressed in this tabular form is treated as an invariant. It can be checked manually against the model constraints to ensure there are no violations. It would also be possible to create a utility that generates a similar table of permissible data shapes from the underlying model, and then mechanically checks that the shape obtained by introspecting the data lies within the shape allowed by the model. We leave this as an exercise for ourselves (or indeed an interested reader).
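For the final, mechanical step of that exercise, one simple approach is to treat both tables as sets of rows and report any observed row that the model does not allow. The sketch below assumes, hypothetically, that a table of allowed rows already exists in the same tab-separated layout as the introspected one; generating that table from the model is the hard part and is not shown. `shape_violations` is an illustrative name.

```bash
#!/usr/bin/env bash
# Sketch: report observed shape rows that are not among the allowed rows.
# ASSUMPTION: both files hold one tab-separated row per line in the same
# layout (e.g. class, property, multiplicity). Deriving the allowed rows
# from the model is not shown here.

# shape_violations OBSERVED ALLOWED -> rows in OBSERVED missing from ALLOWED
shape_violations() {
    obs_sorted=$(mktemp)
    allowed_sorted=$(mktemp)
    LC_ALL=C sort -u "$1" > "$obs_sorted"
    LC_ALL=C sort -u "$2" > "$allowed_sorted"
    # comm -23 keeps lines that appear only in the first (sorted) file
    LC_ALL=C comm -23 "$obs_sorted" "$allowed_sorted"
    rm -f "$obs_sorted" "$allowed_sorted"
}

observed=$(mktemp)
allowed=$(mktemp)
printf '%s\t%s\t%s\n' \
    Establishment name 1  Establishment phone '>1' > "$observed"
printf '%s\t%s\t%s\n' \
    Establishment name 1  Establishment phone 0  Establishment phone 1 > "$allowed"
shape_violations "$observed" "$allowed"   # reports the "phone >1" row only
rm -f "$observed" "$allowed"
```

Treating the shapes as sets keeps the comparison trivial; all of the modelling knowledge would live in whatever produces the allowed table.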

Literal Range Consistency

This test query checks for consistent usage of literal valued properties. It reports, with examples, on literal valued properties where the datatypes of values differ.

Within any project there may be a few cases where this is to be expected: for example, ‘stringy’ properties whose values may be either xsd:string or rdf:langString, or numeric properties whose values may use any one of the numeric datatypes.

In this case, the golden-results file needs to be inspected by hand to ensure that the reported combinations are as expected. Those that are expected can be left in the golden-results file, and those that aren’t should be deleted. The data transform process then needs to be corrected to eliminate the erroneous results.

```sparql
PREFIX dct: <http://purl.org/dc/terms/>
PREFIX skos: <http://www.w3.org/2004/02/skos/core#>
PREFIX xsd: <http://www.w3.org/2001/XMLSchema#>
PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

# To include example subjects and values, uncomment the extra variables in the
# select line and in both the GROUP BY and ORDER BY clauses.
select ?p ?vdt1 ?vdt2 #(?ex1 as ?example1) (?ex2 as ?example2) ?v1 ?v2
where
{ { select ?p ?vdt1 (sample(?s) as ?ex1) (sample(?v) as ?v1)
    where
    { ?s ?p ?v
      FILTER isLiteral(?v)
      bind(datatype(?v) as ?vdt1)
    } GROUP BY ?p ?vdt1
  }
  { select distinct ?p ?vdt2 (sample(?s) as ?ex2) (sample(?v) as ?v2)
    where
    { ?s ?p ?v
      FILTER isLiteral(?v)
      bind(datatype(?v) as ?vdt2)
    } group by ?p ?vdt2
  }
  FILTER (str(?vdt1) < str(?vdt2))
  # Both of these are 'stringy' so let them pass
  FILTER ( !(?vdt1 = rdf:langString && ?vdt2 = xsd:string))
}
GROUP BY ?p ?vdt1 ?vdt2 # ?ex1 ?ex2 ?v1 ?v2
ORDER BY ?p ?vdt1 ?vdt2 # ?ex1 ?ex2 ?v1 ?v2
```
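As with the other checks, the core of the query can be re-stated outside SPARQL to see what it computes. The toy awk sketch below assumes a hypothetical tab-separated input of subject, property and the datatype IRI of each literal value; `mixed_datatypes` is an illustrative name. It reports each pair of distinct datatypes used with the same property, ordered by string comparison so each pair appears once, and lets the rdf:langString/xsd:string pair pass, mirroring the two filters in the query.

```bash
#!/usr/bin/env bash
# Toy version of the literal range consistency check.
# ASSUMPTION (hypothetical format): tab-separated lines of
#   subject<TAB>property<TAB>datatype IRI of a literal value

RDF_LANGSTRING='http://www.w3.org/1999/02/22-rdf-syntax-ns#langString'
XSD_STRING='http://www.w3.org/2001/XMLSchema#string'

# mixed_datatypes FILE -> "property<TAB>datatype1<TAB>datatype2" rows
mixed_datatypes() {
    LC_ALL=C awk -F '\t' -v ls="$RDF_LANGSTRING" -v xs="$XSD_STRING" '
        !(($2 SUBSEP $3) in seen) {        # distinct datatypes per property
            seen[$2 SUBSEP $3] = 1
            n[$2]++
            dt[$2, n[$2]] = $3 ""
        }
        END {
            for (p in n)
                for (i = 1; i <= n[p]; i++)
                    for (j = 1; j <= n[p]; j++) {
                        d1 = dt[p, i]; d2 = dt[p, j]
                        if (!(d1 < d2)) continue            # each pair once
                        if (d1 == ls && d2 == xs) continue  # both "stringy"
                        print p "\t" d1 "\t" d2
                    }
        }
    ' "$1" | LC_ALL=C sort
}

XSD='http://www.w3.org/2001/XMLSchema#'
data=$(mktemp)
printf '%s\t%s\t%s\n' \
    ex:a ex:label "$RDF_LANGSTRING" \
    ex:b ex:label "$XSD_STRING" \
    ex:a ex:score "${XSD}integer" \
    ex:b ex:score "${XSD}decimal" > "$data"
mixed_datatypes "$data"   # reports ex:score with xsd:decimal and xsd:integer only
rm -f "$data"
```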