It’s nearly the end of the year, and so a convenient time to look back at some of our project work and introspect, in a similar vein, about our core technology. So:

What has open linked-data done for us? Nothing!

Except, …

“Well, what about globally unique names?”

A common problem when combining data from multiple sources is homographs: terms that are spelled the same, but mean different things in different contexts. Two databases may have a column named lead, but one refers to a marketing lead, the other to the concentration of atomic element 82, Pb. Or the beach named Seaton occurs twice, one in Devon and one in Cornwall. A simple name like ‘Seaton’ requires context to disambiguate it, but the URI https://environment.data.gov.uk/doc/bathing-water/ukk3101-26800 refers exclusively to Seaton in Cornwall.

So, yes, globally unique identifiers, but not much else, right?

“No. Well except resolvable identifiers”

If you come across the string “Seaton” in some data, you basically have to rely on the context of where you found it to give that term meaning. But the identifier for Seaton bathing water above is an HTTP URI, which means that you can resolve it. Resolving that URI, by pasting it into a web browser or using a command-line tool like curl will return a representation of the resource, giving lots of useful metadata about what kind of thing it is. And supplied in different formats too: HTML, JSON, RDF or XML, depending how you ask.

Well, yes, obviously resolvable identifiers. But …

“And an open approach to data modelling”

With any engagement where we’re helping clients to move data into an open-linked data format, and publish it for internal or external consumption, one of the big challenges is getting the data modelling right. Data modelling involves understanding what the key concepts are in the data, what properties they have, and how they relate to each other. For example in the Environment Agency bathing water application, a water quality sample has a time and date, two bacterial concentration measures and sometimes a flag that the sample has been withdrawn. Each bathing water has a series of samples that have been taken, and overall the samples form a hypercube whose dimensions are location and date-time. Thinking about the sample itself, the latest sample for a given bathing water, the series of readings for a bathing water, and the overall hypercube, all as things we want to model and give a resolvable URI to, changes the nature of the data modelling task. It’s good discipline, and tends, in our experience, to lead to flexible data models that are more robust to changes in requirements as the project moves along.

OK, I grant open data modelling. But apart from that ..

“What about flexible data schemas”

The RDF data representation is very simple, and very flexible. The only structure is a graph, comprised of subject-predicate-object triples. That means there’s no rigidity of a fixed schema, with tables and columns that have to be migrated as the data storage requirements change. We’ve been running the bathing water service for the Environment Agency since 2011. In that time, many new requirements have come along for new data elements to be added. Significant changes have been made to the applications that the data serves. We have not once had to re-image the underlying triple store. As long as the data modelling is done well, new data just integrates nicely into the triple store.

Yes, yes, flexible data schemas are great. But other than that …

“We can easily integrate with other open linked-data?”

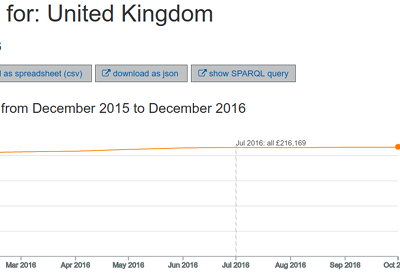

The UKHPI dataset records house price data by UK region. The regions themselves are linked to open data from both Ordnance Survey and the Office for National Statistics. So it is very easy to extract any of the location metadata or statistics published by those separate organisations, and use that data to enrich the house price index.

Yes, but apart from easy data integration …

“There’s the standard query interface”

Open linked-data is based on the W3C standard RDF, which in turn has a standard query language called SPARQL. Not only is SPARQL itself standardised, with extensive tests to ensure that conformant query processors behave the same way, it also includes a standardised HTTP (i.e. web) interface specification. We generally use the open-source Apache Jena as the underlying storage technology in our projects, but if the need arose it would be straightforward to drop-in a different SPARQL engine, perhaps one of the many commercial products, in place of the open-source tools. The SPARQL standard has also meant that we can build our own tools to process domain-specific representations of a problem into a final SPARQL query form, giving both greater simplicity to the systems downstream of that conversion, and a natural break-point at which to introduce web services into our system architectures.

Alright, fine, we have standard query interface …

“And there’s the revealing-query pattern?”

SPARQL gives us a great advantage in building linked-data applications, because it’s useful both internally to the system, and can present a query interface to the outside world. When we build a web application to present linked-data in a domain-specific, user-friendly way, we try to anticipate what information needs users of the system will have. We make those needs as easy to satisfy as we can. Inevitably, though, we will either miss some needs, or it would just make the user experience too complex to be able to answer every conceivable user’s needs. One way to mitigate that is to make direct access to the underlying data possible, by exposing the SPARQL query endpoint. But there’s a problem: SPARQL isn’t always that easy to use, and is unfamiliar to many, if not most, developers and data scientists. One pattern we’ve used in our work to alleviate this problem we call the revealing query pattern. Users interact with an application UI to select and present some information. Internally, the application translates those interactive selections into one or more SPARQL queries, and then presents the data back to the user. On request, the interface will reveal those queries, directly in a query editor. So a curious user can go from selecting and viewing data via the UI, to seeing the query underlying that view, to being able to modify and run that query and see the results. Similarly, we often allow users to reveal where internal web-service API calls are used to access the data in a more service-oriented way.

So: apart from globally unique identifiers, resolvable identifiers, an open approach to data modelling, flexible schemas, ease of integration, standard query and service interfaces and new interaction patterns, what has linked-data ever done for us? Nothing!

Happy holidays, and may 2017 bring you many discoveries and insights.